In the quiet of night, as most of the world sleeps, bats take to the skies with a biological sonar system so sophisticated it puts human engineering to shame. These nocturnal navigators emit high-frequency calls and interpret the returning echoes to construct a real-time, three-dimensional map of their surroundings—a process known as echolocation. For decades, scientists have marveled at this ability, but only recently have we begun to unravel the neural algorithms that underpin it. The study of bat echolocation is not just a biological curiosity; it offers profound insights into how brains process sensory information to model the world in 3D, with implications spanning from neuroscience to robotics and artificial intelligence.

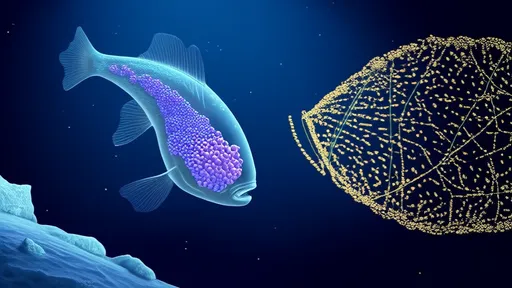

The foundation of this remarkable capability lies in the bat's auditory system. When a bat emits a call, it isn't just sending out a sound; it's launching an acoustic probe into the environment. The returning echo is packed with information: the time delay reveals distance, the shift in frequency (Doppler effect) indicates relative velocity, and the subtle differences in sound between the two ears provide cues about horizontal angle. The intensity and spectrum of the echo can even hint at the texture and material of objects. This raw data is a chaotic stream of auditory signals, yet the bat's brain seamlessly integrates it into a coherent spatial model.
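The three cues described above can be turned into numbers with a few lines of textbook acoustics. The sketch below is only illustrative: the speed of sound, the 1.5 cm ear separation, and the function names are assumptions, and it uses an interaural time difference as a stand-in for the "differences in sound between the two ears", which in real bats also include level and spectral differences.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 degrees C (assumed value)


def range_from_delay(delay_s):
    """Distance to the target in metres; the sound travels out and back."""
    return SPEED_OF_SOUND * delay_s / 2.0


def closing_speed_from_doppler(f_emitted_hz, f_echo_hz):
    """Closing speed in m/s (positive means approaching).

    Solves the two-way Doppler relation
    f_echo = f_emitted * (c + v) / (c - v) for v.
    """
    return SPEED_OF_SOUND * (f_echo_hz - f_emitted_hz) / (f_echo_hz + f_emitted_hz)


def azimuth_from_itd(itd_s, ear_separation_m=0.015):
    """Horizontal angle in degrees from an interaural time difference.

    Far-field approximation: itd = ear_separation * sin(azimuth) / c.
    """
    s = SPEED_OF_SOUND * itd_s / ear_separation_m
    s = max(-1.0, min(1.0, s))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    print(range_from_delay(0.010))                  # echo after 10 ms
    print(closing_speed_from_doppler(60_000.0, 61_000.0))
    print(azimuth_from_itd(20e-6))                  # 20 microsecond lag
```

An echo arriving 10 ms after the call corresponds to a target a little under two metres away, which gives a feel for the timing precision involved.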

At the heart of this process are specialized neurons in the bat's brainstem and midbrain that act as biological signal processors. These cells are exquisitely tuned to specific aspects of the echo. Some neurons fire only in response to very precise time delays, effectively acting as range-finders that code for distance. Others are sensitive to minute frequency shifts, functioning as velocity detectors. A third group compares the input from the left and right ears to calculate the azimuth, or horizontal angle, of a target. This division of labor is the first step in deconstructing the complex echo into manageable computational chunks.
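One common way to picture such range-finding cells is as a bank of units, each with a preferred ("best") delay and a bell-shaped tuning curve. The following is a toy model under that assumption, not a description of measured bat neurons: the Gaussian shape, the 0.5 ms spacing and width, and all names are hypothetical.

```python
import math

# Hypothetical bank of 40 units with best delays from 0.5 ms to 20 ms.
BEST_DELAYS_MS = [0.5 * i for i in range(1, 41)]


def delay_tuned_response(delay_ms, best_delay_ms, width_ms=0.5):
    """Firing strength (0..1) of one unit with Gaussian tuning around its best delay."""
    return math.exp(-0.5 * ((delay_ms - best_delay_ms) / width_ms) ** 2)


def population_response(delay_ms, best_delays_ms=BEST_DELAYS_MS):
    """Responses of every unit in the bank to a single echo delay."""
    return [delay_tuned_response(delay_ms, bd) for bd in best_delays_ms]


def decode_delay(responses, best_delays_ms=BEST_DELAYS_MS):
    """Estimate the echo delay as the response-weighted mean of the best delays."""
    total = sum(responses)
    if total == 0.0:
        raise ValueError("no unit responded; delay is outside the bank's range")
    return sum(r * bd for r, bd in zip(responses, best_delays_ms)) / total


if __name__ == "__main__":
    responses = population_response(6.2)
    print(decode_delay(responses))  # close to the true delay of 6.2 ms
```

Because neighbouring tuning curves overlap, the population can resolve delays that fall between the best delays of individual units.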

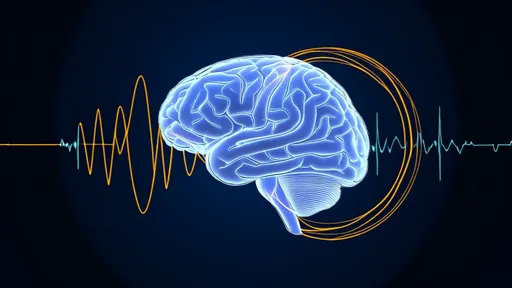

But the real magic happens when these streams of information converge in the auditory cortex. Here, the brain doesn't just process sound; it constructs a world. Research has shown that certain cortical neurons behave like 3D spatial filters. They don't merely respond to a specific delay or frequency; they fire maximally when an echo corresponds to an object in a very particular location in space—a specific combination of distance, elevation, and azimuth. It's as if each neuron represents a tiny voxel, or volume pixel, in the bat's perceptual space. The combined activity of a population of these neurons forms a dynamic neural representation of the 3D environment, updated with every call and echo.
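The voxel analogy can be made concrete by converting each echo's distance, azimuth, and elevation into a cell of a 3D grid. This is a minimal sketch of that idea in engineering terms; the coordinate convention, the 0.25 m voxel size, and the function names are assumptions chosen for illustration.

```python
import math


def echo_to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert a (range, azimuth, elevation) estimate to Cartesian metres.

    Convention assumed here: x is straight ahead, y is to the side, z is up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = range_m * math.cos(el) * math.cos(az)
    y = range_m * math.cos(el) * math.sin(az)
    z = range_m * math.sin(el)
    return x, y, z


def voxel_index(xyz, voxel_size_m=0.25):
    """Integer index of the voxel that contains the point."""
    return tuple(math.floor(c / voxel_size_m) for c in xyz)


def accumulate_echoes(echoes, voxel_size_m=0.25):
    """Count echoes per voxel; echoes is a list of (range, azimuth, elevation)."""
    grid = {}
    for range_m, az_deg, el_deg in echoes:
        idx = voxel_index(echo_to_xyz(range_m, az_deg, el_deg), voxel_size_m)
        grid[idx] = grid.get(idx, 0) + 1
    return grid


if __name__ == "__main__":
    echoes = [(1.1, 0.0, 0.0), (1.2, 0.0, 0.0), (1.1, 90.0, 0.0)]
    print(accumulate_echoes(echoes))
```

Each grid cell plays the role of one "voxel neuron": its count rises when echoes keep arriving from that location, and a fresh grid can be built for every call.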

This neural mapping is astonishingly adaptive. A bat flying at high speed through a dense forest must update its world model within milliseconds to avoid collisions and catch prey. Its brain achieves this through predictive coding. It doesn't passively wait for echoes; it generates an internal model of the expected sensory input based on its own motor commands (the act of calling) and its current flight path. When an echo returns, the brain compares it to this prediction. Deviations from the prediction, such as an unexpected branch or a fluttering moth, are highlighted for rapid processing, allowing for fast course corrections. This predictive mechanism is a cornerstone of efficient sensory processing, reducing the computational load by focusing resources on novel or unexpected information.
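The compare-against-prediction loop can be sketched in a few lines. This toy version assumes the bat flies straight toward already known objects and that an echo counts as "expected" if its delay matches a prediction within a fixed tolerance; the function names, the tolerance, and the straight-line flight model are all simplifying assumptions.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed


def predict_delays(known_ranges_m, flight_speed_mps, dt_s):
    """Expected echo delays after flying toward known objects for dt_s seconds."""
    predicted = []
    for r in known_ranges_m:
        new_range = max(r - flight_speed_mps * dt_s, 0.0)
        predicted.append(2.0 * new_range / SPEED_OF_SOUND)
    return predicted


def unexpected_echoes(observed_delays_s, predicted_delays_s, tolerance_s=1e-4):
    """Return the observed delays that no prediction explains within tolerance."""
    surprises = []
    for obs in observed_delays_s:
        if all(abs(obs - p) > tolerance_s for p in predicted_delays_s):
            surprises.append(obs)
    return surprises


if __name__ == "__main__":
    predicted = predict_delays([3.0, 5.0], flight_speed_mps=5.0, dt_s=0.1)
    moth_delay = 2.0 * 1.0 / SPEED_OF_SOUND  # new object 1 m away
    observed = predicted + [moth_delay]
    print(unexpected_echoes(observed, predicted))  # only the moth remains
```

Only the echo that the internal model did not anticipate is passed on, which is the sense in which prediction reduces the processing load.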

The algorithms governing this process are a masterclass in efficiency. One key strategy is active sensing. The bat doesn't use a fixed sonar pulse. It dynamically adjusts the duration, frequency, and repetition rate of its calls based on the task and environment. When cruising in open space, it might use long, low-repetition-rate calls to survey large areas. But when closing in on an insect, it shifts to a rapid terminal buzz: a barrage of ultra-short calls that provides a high-resolution, high-update-rate stream of data for the final strike. This adaptive sampling means the brain is never overwhelmed with redundant data; it requests information on a need-to-know basis.
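A simple rule captures the spirit of this adaptive sampling: wait at least as long as the echo needs to return before calling again, and keep each call shorter than the round trip so the echo does not overlap the outgoing sound. The sketch below applies that rule; the safety margin and the interval and duration limits are illustrative assumptions, not measured bat parameters.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed


def plan_call(target_range_m, margin=1.5,
              min_interval_s=0.005, max_interval_s=0.1,
              max_duration_s=0.01):
    """Pick a call interval and duration for a target at the given range.

    The interval is a safety margin times the echo round-trip time, clamped
    to [min_interval_s, max_interval_s]. The duration is capped by the
    round-trip time so that call and echo do not overlap.
    """
    round_trip_s = 2.0 * target_range_m / SPEED_OF_SOUND
    interval_s = min(max(margin * round_trip_s, min_interval_s), max_interval_s)
    duration_s = min(round_trip_s, max_duration_s)
    return {
        "interval_s": interval_s,
        "duration_s": duration_s,
        "rate_hz": 1.0 / interval_s,
    }


if __name__ == "__main__":
    for range_m in (10.0, 2.0, 0.2):
        print(range_m, plan_call(range_m))
```

With these assumed limits, a target 10 m away yields roughly a dozen long calls per second, while a target 20 cm away pushes the plan to its 200 calls-per-second ceiling with much shorter calls, mirroring the shift from cruising to the terminal buzz.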

Furthermore, the brain employs noise-cancellation algorithms remarkably similar to those in modern headphones. The outgoing call is incredibly loud—often over 130 decibels—yet the bat must hear the faint returning echo without being deafened by its own voice. To solve this, the brain sends a copy of the motor command for the call to the auditory system. This "efference copy" acts as a cancellation signal, suppressing the neural response to the self-generated sound while leaving sensitivity to external echoes intact. This allows the bat to operate its powerful biological sonar without auditory self-sabotage.
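In signal-processing terms, the efference copy resembles subtracting a known reference from the incoming signal. The sketch below is only a signal-level analogy under that assumption: real neural suppression is not a sample-by-sample subtraction, and the function name and example values are hypothetical.

```python
def suppress_self_call(received, efference_copy, gain=1.0):
    """Subtract a scaled copy of the expected self-generated call.

    received: samples picked up by the ear (own call plus any echoes).
    efference_copy: the predicted samples of the bat's own call, assumed
    to be aligned with the start of `received`.
    """
    padding = [0.0] * max(len(received) - len(efference_copy), 0)
    reference = list(efference_copy) + padding
    return [r - gain * e for r, e in zip(received, reference)]


if __name__ == "__main__":
    call = [8.0, -8.0, 8.0]            # loud outgoing call
    echo = [0.125 * s for s in call]   # faint echo of the same call
    # The ear hears the call immediately and the echo five samples later.
    received = call + [0.0, 0.0] + echo + [0.0, 0.0]
    print(suppress_self_call(received, call))
    # [0.0, 0.0, 0.0, 0.0, 0.0, 1.0, -1.0, 1.0, 0.0, 0.0]
```

After the subtraction only the delayed echo remains, even though it is far weaker than the call that produced it.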

The implications of understanding these neural algorithms extend far beyond the world of bats. Engineers and computer scientists are looking to bat neurobiology for inspiration in solving complex problems in autonomous navigation and machine perception. The robust, low-power, and real-time 3D modeling performed by a gram of bat brain is a gold standard for developing algorithms for self-driving cars, drones, and submersibles. These systems must also navigate complex, dynamic environments using sensors like lidar and radar, which face similar challenges of echo interpretation, noise filtering, and rapid data integration.

In the field of robotics, biomimetic sonar systems are being developed that mimic the bat's active sensing strategy. Instead of constantly emitting signals, these systems use adaptive pulse sequences, changing their sampling strategy based on what they "see," thereby conserving energy and computational power. The predictive coding models observed in bats are also being incorporated into AI to create more efficient and context-aware perception systems. These AIs learn to predict sensory input, allowing them to focus processing power on anomalies and changes, leading to faster and more efficient decision-making.

Perhaps the most profound impact is in neuroscience itself. The bat serves as a fantastic model organism for understanding how the brain represents space. The discovery of spatial neurons in the bat cortex, cells that encode 3D coordinates, complements the famous "place cells" and "grid cells" found in the hippocampus and entorhinal cortex of rodents navigating in 2D. Studying how the bat brain builds a volumetric map provides a more complete picture of the neural basis of navigation and could offer clues into human spatial disorders.

In conclusion, the humble bat, often misunderstood and maligned, is a flying testament to the power of evolutionary innovation. Its brain executes a real-time 3D modeling algorithm of breathtaking sophistication, all while consuming less power than a small light bulb. By eavesdropping on the neural conversations that guide a bat's flight, we are not only unlocking the secrets of a remarkable animal but also gleaning fundamental principles of computation, perception, and intelligence. The echoes returning from the dark hold lessons that are illuminating the path toward a new generation of intelligent technology, all inspired by the silent, sonar-guided flight of the night.

By /Aug 21, 2025
